While some futurists and technology executives predict a blanket shift to fully autonomous civilian vehicles over the next two decades, the Army does not view autonomy as a one-size-fits-all solution for future operations.

It’s not that DoD doesn’t have access to the technology. In fact, it could be argued that events like the Defense Advanced Research Projects Agency’s Grand Challenges in 2004, 2005 and 2007 established the technical foundation for many of today’s commercial driverless vehicle initiatives. (In the Grand Challenges, DARPA gave prizes to spur the development of autonomous vehicles.)

Moreover, the Army’s multidomain battlespace encompasses potential autonomous elements in the air, on the ground and even underground.

Consequently, rather than pursuing a blind embrace of unmanned or robotic technology, the Army’s unique role in national defense demands a different way of viewing autonomous technology and its possible contributions to warfighter effectiveness.

In the Air

With Army unmanned aircraft systems (UAS) having accumulated flight hours in the millions, and with a large percentage of those hours generated in combat operations, it’s natural to wonder about the service’s embrace of autonomy in future air operations.

However, “complete autonomy” is not likely to happen in Army aviation, Maj. Gen. William Gayler, commander of the U.S. Army Aviation Center of Excellence and Fort Rucker, Ala., said at an Association of the U.S. Army-sponsored aviation forum in September.

Instead, Gayler favors “supervised autonomy” for reasons ranging from ethical man-in-the-loop considerations to the simple fact that full autonomy is unlikely to work in contested environments.

So where does Army aviation plan to take autonomous technologies?

Many people who think about military autonomy tend to think of UAS operations that have proven invaluable over the past 20 years, according to Col. Thomas von Eschenbach, director of the Capability Development and Integration Directorate at the Aviation Center of Excellence.

He said the concept of “supervised autonomy” is important because it addresses “the paradigm that unmanned aircraft systems are not really unmanned. They are remotely piloted, because you have an operator on the other side.

“Many times, when we present our Army aviation modernization strategy to service leadership we are asked, ‘When are you going to go fully unmanned? When are you going to unman that helicopter?’ ” he said, adding that some narratives falsely assume aviators don’t want to “unman” things.

“But this isn’t about unmanning something,” he said, pointing to the need to “get to the true ‘why’ we want to do that.

“It isn’t about taking people out of a cockpit,” he continued. “It isn’t about removing the human from the system. It’s more about how we can get the human to focus on the truly important decisions in combat and how we can get the machine to operate in the more routine aspects of a system.”

Sliding Scale

Von Eschenbach offered a resulting “personal thesis” that “the future will require a selectable and sliding scale of the confluence between artificial intelligence and human interaction depending on the mission and the human’s ability to generate situational awareness, spatial dexterity and contextual understanding.”

He asserted that removing the person from the equation “eliminates human cognition and sometimes those subconscious things that allow a human to decide. And you can’t do that when you’re remotely in a box being provided full-motion video.”

Supervised autonomy, by contrast, could let multiple systems make the decisions they can handle faster and more efficiently, while freeing the human to concentrate on the decisions and processes best performed by a person.

He noted that current helicopter designs already have “some levels of autonomy there,” such as in their responses to flight-control inputs or in the ability of aircraft survivability equipment to detect threats and deploy countermeasures.

“If you are flying in a hostile environment and the enemy decides to shoot a missile at you, as soon as the helicopter detects that, today all it does is deploy countermeasures,” he said. “But in supervised autonomy it could deploy the countermeasure, reduce the engine heat signature—if it was a heat-seeking missile—and then potentially cue you for evasive maneuvers, still allowing the pilot some level of control. And based off the location of the missile, it could potentially send a message out to others to reroute around that hazard. That is what we want to work on as supervised autonomy. The human still is ultimately the decider, but the function allows this machine to be better and faster than a human and therefore letting the human now make a decision.”

Another argument for supervised autonomy involves the Army’s tactical battlespace.

“In a sterile kind of procedural system, autonomy is good,” von Eschenbach said. “But in a truly chaotic system, I think the human still is the best computer. So now you have my point about the sliding scale. Let the commander decide where along that scale that balance needs to be. On a very easy thing, maybe fully autonomous is good. In a highly complex chaotic environment, we need a lot of human control with the machine doing the mundane kind of things.”

‘Optional Manning’

He added that a sliding scale of autonomy also lends itself to the idea of “optional manning” of an aircraft, where autonomy could be used in thoroughly controlled environments, but a person would be used in more dynamic combat settings.

Acknowledging that the Army will likely make “incremental progress on supervised autonomy” with its current rotary-wing, fixed-wing and UAS platforms, he noted that those platforms lack some “cornerstone capabilities” required for full implementation.

“Future vertical lift is not only unique in that it is potentially a truly different way of flying,” he said. “It’s the first ‘clean-sheet design’ the aviation branch has had since it became a branch in 1983. And you don’t get a true game-changing opportunity unless you do it with a clean-sheet design. Now, that doesn’t mean we can’t continue to increase our levels of autonomy in our other systems. But you don’t get that 10-times kind of capability increase until you get with future vertical lift.”

Building the Foundation

Col. William T. Nuckols Jr., director of the Mounted Requirements Division in the Capabilities Development and Integration Directorate at the U.S. Army Maneuver Center of Excellence at Fort Benning, Ga., outlined a foundation for applying autonomous technologies that builds on the Army Operating Concept, the supplemental Combat Vehicle Modernization Strategy and the March 2017 Robotic and Autonomous Systems Strategy.

Nuckols said those foundational documents support the Maneuver Force Modernization Strategy. The strategy begins with an understanding of the operational environment and potential changes over the next two decades, followed by an assessment of whether current brigade combat team organizational and operational concepts match up, identification of any “gaps” and then development of requirements to drive necessary materiel changes.

A key component of the strategy is the Combat Vehicle Modernization Strategy–Execution, which he described as “fleshing out” the Combat Vehicle Modernization Strategy, originally approved in fall 2016.

Capabilities Prioritized

“We’ve prioritized some of the capabilities described in the [Combat Vehicle Modernization Strategy] and talked about how we’re going to address them,” he said, highlighting the Maneuver Center of Excellence commanding general’s “critical enabling technology” focus areas.

One of those focus areas is the Army’s new Maneuver Robotics and Autonomous Strategy (MRAS).

“We believe that MRAS will do those three things for us: expand situational awareness, enhance force protection and increase lethality,” he said, adding that other strategy goals range from reducing soldiers’ physical and cognitive load to taking over many of the dangerous, dirty and dull jobs now performed by soldiers.

In parallel with development of these doctrinal underpinnings, the last several years have seen the Army support and conduct numerous demonstrations, experiments and activities involving robotic platforms.

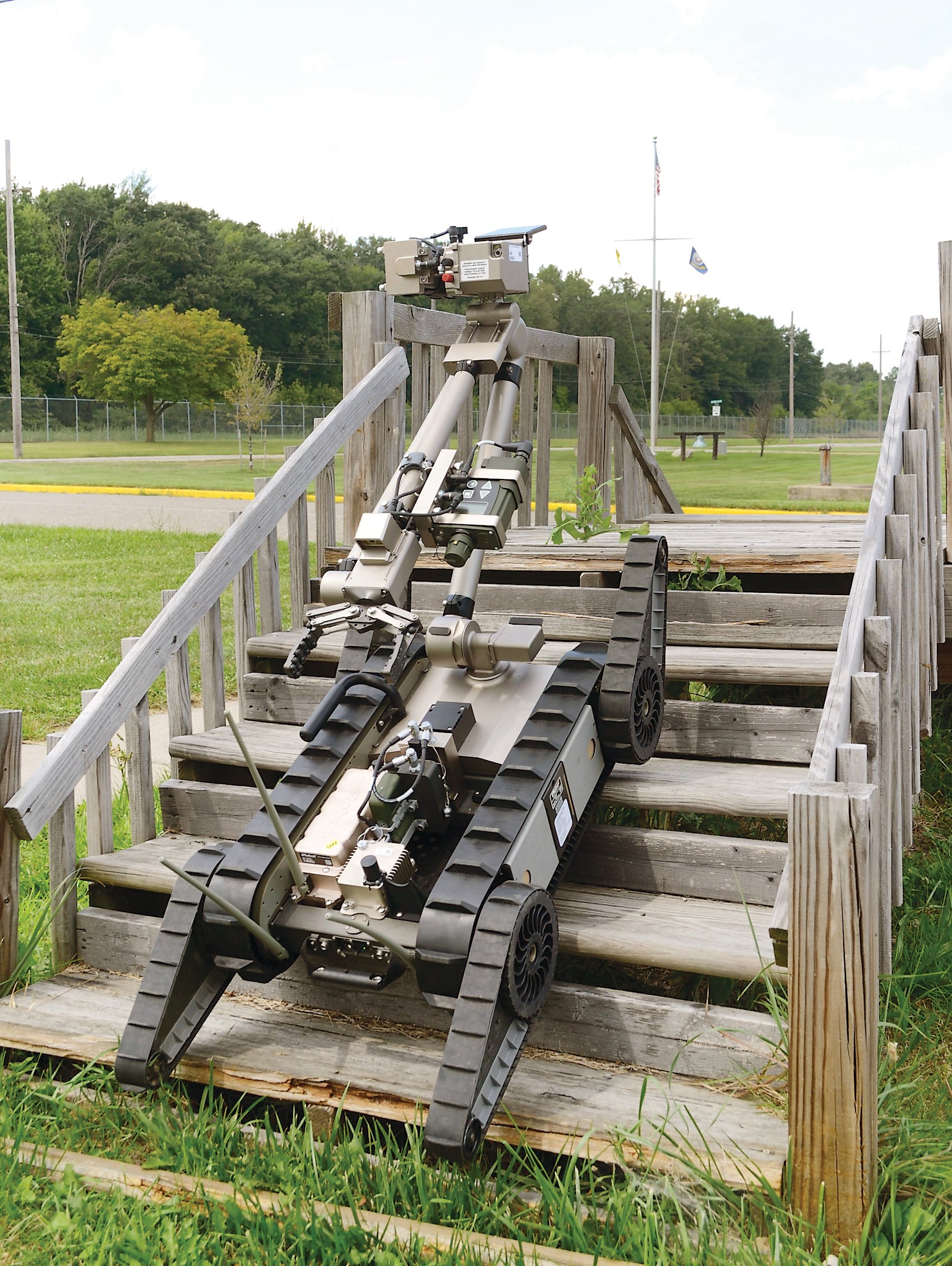

For example, robots provided the 2nd Infantry Division’s 23rd Chemical Battalion with a remote, first-look capability into underground facilities in South Korea. “These robots are one-of-a-kind and filled a critical gap for soldiers on the front lines,” said Lt. Col. Mark Meeker, a Field Assistance in Science and Technology adviser assigned to U.S. Forces Korea.

No Agreement on Definition

Asked whether such investigations have reflected an evolution toward more autonomy, Nuckols characterized the answer as “very complex,” explaining, “We can’t even get everybody—not just within the Army but across any interested parties—to agree on exactly what autonomy means. It has a slightly different definition depending on whom you ask.”

In the case of autonomous fighting-machine concepts, for example, he pointed to the policy implications of DoD Directive 3000.09: Autonomy in Weapon Systems and its “man-in-the-loop” mandates surrounding lethal decisions.

“But for nonlethal applications, in my opinion, the more autonomy the better,” he said. “Let me give you an example. If we are looking at swarming behavior from small aerial or ground robotic platforms or systems, and I give it the task of going out to do a zone reconnaissance over a specific area, the more autonomy that swarm has, the better it is for me as a soldier, commander or leader in the field.

“That’s because I can launch that swarm, and I can focus on other tasks as long as I have confidence that it’s going to do its task and get to me the information that I need,” he said.

“Now, some would quibble with me and say that’s not autonomous because I told it what to do,” he continued. “I would argue that is autonomy. It has a task and a mission and it goes and it conducts it autonomously.”

Nuckols said another area of disagreement over autonomy involves artificial intelligence (AI).

“Some say we have got to have the [AI] technology to enable autonomy,” he said. “But for swarming, for example, I would argue that’s not true AI. It’s just very complex algorithms and heuristics and certain rules that are built into the software that guides the behavior of that robotic platform.”

That said, Nuckols acknowledged that the service wants more AI.

“We want more in our manned combat systems to help with decision-making, from command-control decisions even down to fire-control decisions,” he said. “If we have AI better integrated into our fighting vehicles, it can greatly help the crew in identifying, classifying and prioritizing targets. That doesn’t mean it’s not the crew engaging the targets. But AI assists them with that cognitive aspect of handling multiple target engagements almost simultaneously. So could you argue that’s autonomy? I think to a degree it is. But it’s assisted autonomy. And I would argue that more autonomy will actually drive down overall long-term system cost.”

To support his assertion, Nuckols noted that current Maneuver Center of Excellence thinking about a future Robotic Combat Vehicle involves two RCVs controlled by a single control vehicle carrying six soldiers.

“We haven’t started building anything yet, but this is our start point for our mission analysis planning. The control vehicle might be an [Armored Multi-Purpose Vehicle] with a two-man crew and four robotic controllers in the back, two controllers for each RCV. That’s six soldiers controlling three vehicles. Well, that’s progress. But it’s just a start point. I think within a few years—not a handful but probably within 10 to 15 years—we will successfully get that down to one operator for four RCVs,” he said.

“As your autonomy and your AI increases, the level of supervision required by humans is decreased,” he said. “So we could get to the point where one soldier-controller is making lethal decisions for those RCVs, but the RCVs are doing the rest of it on their own. That’s theoretical right now, but I’ll tell you, I think that’s the future.”

Future Requirements

While it is impossible to predict future Army requirements with 100 percent certainty, it is possible to identify some general trends.

Now-retired Gen. David G. Perkins, then commander of the U.S. Army Training and Doctrine Command, said, “From an autonomous point of view, I like to approach it from a strategy versus a specific plan.”

Instead of identifying a specific robot, Perkins said future Army planners “ought to look at entire mission sets we have and say, ‘Maybe we ought to move away from a manned approach to that of an autonomous one.’ For example: Obstacles. If you have to breach a minefield or take down wires, why would we ever want to do that with manned systems at all?”

“I want to get away from, let’s have a robot pick that up or let’s have a robot do that, versus let’s look at entire mission sets and say they ought to be autonomous.

“If you look at subterranean operations, which is a growth industry, why would the first thing ever in a tunnel be a soldier? Why would we want to do that? That should be something that you look at an autonomous solution to perform.”

Perkins noted that a likely spinoff of autonomous technology is the “optional manning” concept.

“As we are looking toward systems, one of the first-order principles should be, at some point in time every piece of ground equipment and maybe air equipment can be optionally manned,” he said. “Maybe for some mission I want it to be manned and maybe the next mission the commander wants it to be unmanned.”

He offered the example of a manned helicopter delivering an infantry squad and then following that with unmanned or autonomous ammunition or fuel deliveries.

“So what I get interested in at this level is, what mission sets do we want to have autonomous? How do we employ optionally manned systems? And what is the decision criteria between whether that should be manned or unmanned? Then we look at technology maturity. And we do that from the commander’s point of view. What he or she is focusing on is the decision to man or unman a particular mission. But the basics of the capability are pretty much the same,” Perkins said.